This post was adapted from a talk called "String Theory", which I co-presented with James Edward Gray II at Elixir & Phoenix Conf 2016. I originally posted it on the Big Nerd Ranch blog. My posts on Elixir and IO Lists (here and here) were also part of that talk.

In my first post on Unicode and UTF-8, I showed you the basis of Elixir's great Unicode support: every string in Elixir is a series of codepoints, encoded in UTF-8. I explained what Unicode is, and we walked through the encoding process and saw the exact bits it produces.

For this post, what's important to know is that UTF-8 represents codepoints using three kinds of bytes. Larger codepoints get a leading byte followed by one, two or three continuation bytes, and the leading byte tells how many continuation bytes we should expect. Smaller codepoints just get a single solo byte.
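To make those three kinds of bytes concrete, here is a small sketch. The ByteKind module and its classify/1 function are hypothetical names, not part of Elixir. The sketch labels each byte by its high-order bits:

- Solo bytes start with 0.
- Continuation bytes start with 10.
- Leading bytes start with 110, 1110 or 11110, depending on how many continuation bytes follow.

It only inspects bit patterns and does not check that a sequence is valid UTF-8.

```elixir
defmodule ByteKind do
  # Hypothetical helper: labels one byte by its leading bit pattern.
  # It only inspects bit patterns; it does not validate whole sequences.
  def classify(byte) when byte in 0x00..0x7F, do: :solo          # 0xxxxxxx
  def classify(byte) when byte in 0x80..0xBF, do: :continuation  # 10xxxxxx
  def classify(byte) when byte in 0xC0..0xDF, do: {:leading, 1}  # 110xxxxx
  def classify(byte) when byte in 0xE0..0xEF, do: {:leading, 2}  # 1110xxxx
  def classify(byte) when byte in 0xF0..0xF7, do: {:leading, 3}  # 11110xxx
  def classify(_byte), do: :invalid                              # 11111xxx never appears in UTF-8
end

"a™" |> :binary.bin_to_list() |> Enum.map(&ByteKind.classify/1)
# => [:solo, {:leading, 2}, :continuation, :continuation]
```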

Each kind of byte has a distinct pattern, and by using those patterns, Elixir can do a lot of things correctly that some other languages mess up, like reverse a string without breaking up its characters. Let's see how.

Reversing a UTF-8 String

Suppose we wanted to reverse the string "a™". Elixir represents that string as a binary with four bytes: the "a" gets a solo byte, and the "™" gets three bytes (a leading byte and two continuation bytes).

For simplicity's sake, we can picture "a™" like this:

[Solo] [First of 3] [Continuation] [Continuation]

You wouldn't want to reverse it like this, scrambling the multi-byte "™":

[Continuation] [Continuation] [First of 3] [Solo]

Instead, you'd want to reverse it like this, keeping the bytes for "™" intact:

[First of 3] [Continuation] [Continuation] [Solo]

Elixir does this correctly because, thanks to using UTF-8, it can tell which bytes should go together. That's also what lets it correctly measure the length of a string, or get substrings by index: because it knows which bytes go together, it knows whether (for example) the first three bytes express one character or three.
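Here is a rough way to see this in iex, using only standard functions. byte_size/1 counts raw bytes, while String.length/1 and String.slice/3 count characters. Reversing the raw bytes (via Erlang's :binary module) produces something that isn't even valid UTF-8. Reversing whole codepoints keeps "™" intact.

```elixir
s = "a™"

byte_size(s)          # => 4 (one solo byte plus three bytes for "™")
String.length(s)      # => 2
String.slice(s, 1, 1) # => "™"

# Reversing raw bytes scrambles "™" and yields invalid UTF-8.
naive = s |> :binary.bin_to_list() |> Enum.reverse() |> :binary.list_to_bin()
String.valid?(naive) # => false

# Reversing whole codepoints keeps the three bytes of "™" together.
s |> String.codepoints() |> Enum.reverse() |> Enum.join() # => "™a"
```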

Well, that's part of it. There's one more layer to consider.

Grapheme Clusters

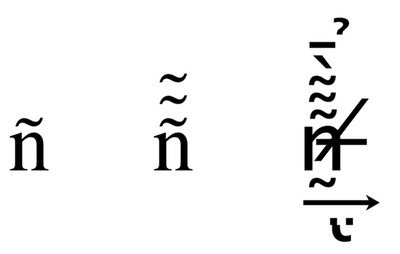

Not only can we have multiple bytes in one codepoint; we can also have multiple codepoints in one "grapheme". A grapheme is what most people would consider a single visible character. In some cases, what looks like "a letter with an accent mark" is composed of a "plain" letter followed by a "combining diacritical mark," which says, "hey, put this mark on the previous letter." A series of codepoints that represents a single grapheme is called a "grapheme cluster."

```elixir
noel = "noe\u0308l" # => "noël"
String.codepoints(noel) # => ["n", "o", "e", "̈", "l"]
String.graphemes(noel) # => ["n", "o", "ë", "l"]
```

Notice that Elixir lets us ask for either the codepoints or the graphemes in that string.

Now, you might wonder: if I can add one mark to a letter, can I add two? How many can I add?

The answer is: you can add a boatload of them!

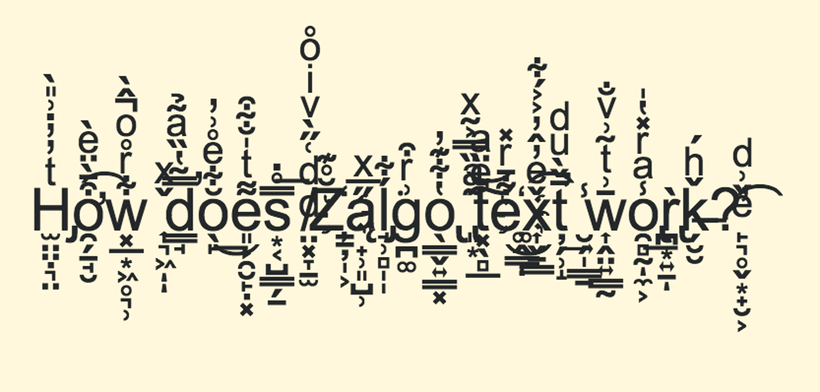

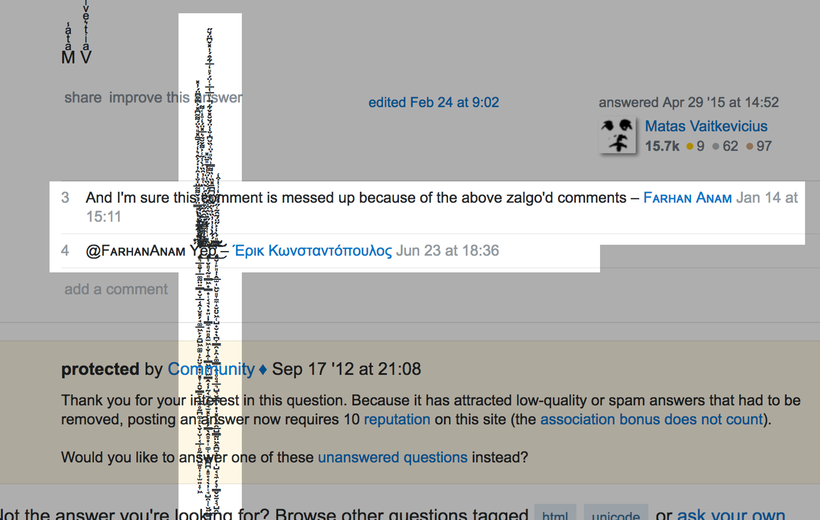

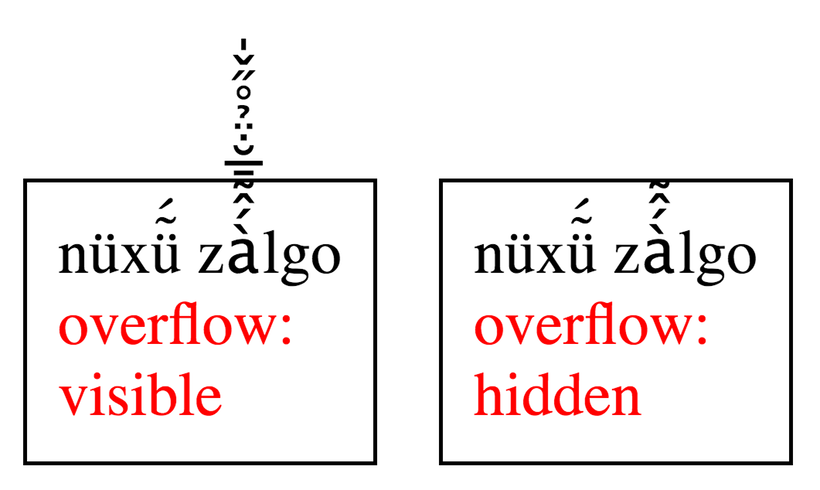

You may have seen "zalgo text," where some poor website's text box overflows with horrible-looking characters and the owners think they've been hacked. You may have seen questions on Stack Overflow asking how to keep people from putting this junk on a website. And you may have seen snarky responses that break the Stack Overflow comment section.

Because unfortunately, there's no easy way to prevent people from putting this on your site. Zalgo text is not a bug. It's a (misused) feature.

Remember, Unicode is trying to cover all of human language. It supports writing from left to right or right to left, and it supports special Javanese punctuation which "introduces a letter to a person of older age or higher rank".

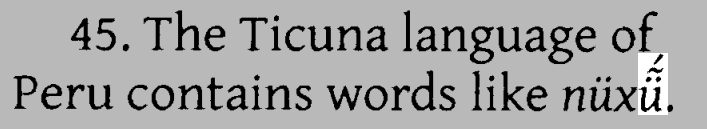

And it turns out that some languages need multiple combining marks per character - like the Ticuna language of Peru, which is a tonal language and uses the marks to indicate tones.

I mean, you could screen out all combining marks, but that would break a lot of Unicode text. You could screen out any characters with more than one combining mark, but you don't want to outlaw the Ticuna in an attempt to control your page layout. Could you limit it to 5? Maybe, if you don't mind alienating users from Tibet, who may use 8 or more combining marks.
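If you do want to measure how heavily marked a string is before deciding on a policy, one option is to count combining marks per grapheme. This is a minimal sketch. CombiningMarks and max_per_grapheme/1 are hypothetical names, and the sketch assumes that the regex category \p{M} (any Unicode mark) is a good enough definition of "combining mark". What limit to enforce, if any, is still up to you.

```elixir
defmodule CombiningMarks do
  # Hypothetical helper: the largest number of Unicode marks (category M)
  # attached to any single grapheme in the string; 0 for an empty string.
  def max_per_grapheme(string) do
    string
    |> String.graphemes()
    |> Enum.map(fn grapheme -> length(Regex.scan(~r/\p{M}/u, grapheme)) end)
    |> Enum.reduce(0, &max/2)
  end
end

CombiningMarks.max_per_grapheme("noe\u0308l")           # => 1
CombiningMarks.max_per_grapheme("e\u0301\u0300\u0302")  # => 3
```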

A simpler solution would be to just declare "this text isn't allowed to overflow its container."

Anyway, the fact that multiple codepoints can be one grapheme—like an "e" codepoint followed by an "accent" codepoint—means that the string reversal I showed earlier wasn't quite right. To properly reverse a string, you have to first group the bytes into codepoints, then group the codepoints into graphemes, and then reverse those.

```elixir
noel = "noe\u0308l"
noel |> String.codepoints() |> Enum.reverse() |> Enum.join() # => "l̈eon"
noel |> String.graphemes() |> Enum.reverse() |> Enum.join() # => "lëon"
```

The latter is essentially what String.reverse does; the former is a common way that programming languages do it wrong.
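Here is a quick check of that claim. It uses the decomposed spelling of "noël", so the combining mark is a separate codepoint:

```elixir
noel = "noe\u0308l"

# Reversing by graphemes keeps the "e" and its diaeresis together.
String.reverse(noel) == (noel |> String.graphemes() |> Enum.reverse() |> Enum.join())
# => true

String.reverse(noel) # => "lëon"
```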

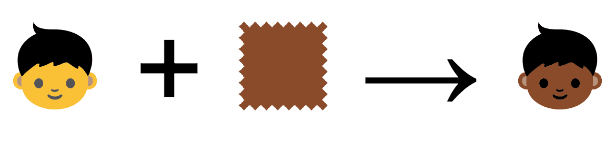

By the way, emoji can also be created using multiple codepoints. One example of this is skin tone modifiers:

👍🏿 👍🏾 👍🏽 👍🏼 👍🏻

Each of those is a "thumbs up" codepoint (👍) followed by a skin tone modifier codepoint (like 🏿). Skin tone modifiers are supported for various emoji that depict people and body parts.
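You can see the two codepoints, and the single grapheme they form, with the same functions we used on "noël". The escapes below are U+1F44D (thumbs up) and U+1F3FF (the darkest skin tone modifier). This assumes an Elixir version whose grapheme rules treat emoji modifiers as part of the preceding emoji, as current versions do.

```elixir
thumbs = "\u{1F44D}\u{1F3FF}" # thumbs up + dark skin tone modifier

String.codepoints(thumbs) # => ["👍", "🏿"]
String.graphemes(thumbs)  # => ["👍🏿"]
String.length(thumbs)     # => 1
```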

There are plans to similarly interpret things like "police officer + female sign" as "female police officer," which is nice.

Unicode also supports drawing a family by combining people emojis with the "zero width joiner" character, but in my opinion, this is insane. No font can support every possible configuration of a family—number, age and gender of adults and children, and skin tone of each family member—and give each combination its own character. If a group of individual emoji doesn't cut it, it looks to me like a job for the

Anyway, to recap: we can have multiple UTF-8 bytes for one Unicode codepoint, and multiple codepoints can form one grapheme.

Gotchas

All this leads to a few little gotchas for programmers.

First, when checking the "length" of a string, you have to decide what you mean. Do you want the number of graphemes, codepoints, or bytes? String.length in Elixir will give you the number of graphemes, which is how long the string "looks". If you want byte_size or a count of codepoints, you'll have to be explicit about that.
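For example, take the decomposed "noël" from earlier (an "e" followed by a combining diaeresis). The three measurements all differ:

```elixir
noel = "noe\u0308l"

String.length(noel)                     # => 4 (graphemes)
noel |> String.codepoints() |> length() # => 5 (codepoints)
byte_size(noel)                         # => 6 (bytes; U+0308 takes two)
```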

And while byte_size is an O(1) operation, String.length has to walk through the whole string, grouping bytes into codepoints and codepoints into graphemes, so it's O(N). (If you don't understand these terms, see my article on Big O notation.)

Second, string equality is tricky. This is because there can be more than one way to build a given visible character; for example, the accented "e" in "noël" can be written as an "e" followed by a combining diacritical mark. This way of building it has to exist, because Unicode supports adding dots to any character. However, because "ë" is a common grapheme, Unicode also provides a "precomposed character" for it—a single codepoint that represents the whole grapheme.

A human can't see any difference between "noël" and "noël", but if you compare those two strings with ==, which checks for identical bytes, you will find that they're not equal. They have different bytes, but the resulting graphemes are the same. In Unicode terms, they are "canonically equivalent."
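One way to see the equivalence is to normalize both spellings to the same form with String.normalize/2. NFC composes "e" plus the combining mark into the single precomposed codepoint, and NFD decomposes it back. After normalization, the bytes match:

```elixir
decomposed = "noe\u0308l"
precomposed = "no\u00EBl"

decomposed == precomposed                         # => false
String.normalize(decomposed, :nfc) == precomposed # => true
String.normalize(precomposed, :nfd) == decomposed # => true
```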

If you don't care about the difference between "e with an accent" spelled as one codepoint or two, use String.equivalent?/2 to ignore it. That function normalizes the strings before comparing them.

```elixir
{two_codepoints, one_codepoint} = {"e\u0308", "\u00EB"} # => {"ë", "ë"}
two_codepoints == one_codepoint # => false
String.equivalent?(two_codepoints, one_codepoint) # => true
```

Finally, changing case can be tricky, even though it's essentially a (heh heh) case statement that's run once per grapheme: "if you get 'A', downcase it as 'a', if 'B', as 'b'...".

Elixir mostly gets this right:

```elixir
String.downcase("MAÑANA") == "mañana" # => true
```

But human language is complicated. You think you have a simple thing like downcasing covered, then you learn that the Greek letter sigma ("Σ") is supposed to be "ς" at the end of a word and "σ" otherwise.

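Even Elixir doesn't bother to support that by default; its ordinary downcasing works one grapheme at a time, so it can't consider what comes after the sigma. (More recent versions of String.downcase/2 accept a :greek mode that applies the final-sigma rule.) If you're on an older version, or need other context-sensitive rules, you can write your own GreekAware.downcase/1 function.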
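Here is a rough sketch of what such a function might look like. GreekAware is the hypothetical module named above. This version downcases normally, then replaces any "σ" that follows a letter and is not followed by another letter with "ς". It ignores edge cases such as combining marks or punctuation inside words.

```elixir
defmodule GreekAware do
  # Hypothetical sketch: ordinary downcasing, then a final-sigma pass.
  # A "σ" preceded by a letter and not followed by one is treated as word-final.
  def downcase(string) do
    Regex.replace(~r/(?<=\p{L})σ(?!\p{L})/u, String.downcase(string), "ς")
  end
end

GreekAware.downcase("ΟΔΥΣΣΕΥΣ") # => "οδυσσευς"
GreekAware.downcase("Σ")        # => "σ"
```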

One last thing: an Elixir regex can tell Unicode letters from non-alpha characters, but only if you pass the /u flag.

```elixir
String.replace("&ñá#śçôλ!", ~r/[^[:alpha:]]/u, "") # => "ñáśçôλ"
```

But How Do I Type It?

So, suppose you're browsing the Unicode code charts (as one does) and you come across something you want to put in an Elixir string (like "🂡"). How do you do it?

Well, you can just type or paste it directly into an Elixir source file. That's not true for every programming language.

But if you want or need another way, you can use the codepoint value like this:

```elixir
# hexadecimal codepoint value
"🂡" == "\u{1F0A1}" # => true

# decimal codepoint value
"🂡" == <<127_137::utf8>> # => true
```

If you read my first article on Unicode, you can understand what the ::utf8 modifier does: it encodes the number as one or more UTF-8 bytes in the binary, just like we did there with ⏰.
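To check this, you can compare the ::utf8 result against the four bytes written out by hand (U+1F0A1 encodes as F0 9F 82 A1). The same modifier also works in reverse inside a pattern match, decoding a one-codepoint binary back into an integer:

```elixir
<<127_137::utf8>> == <<0xF0, 0x9F, 0x82, 0xA1>> # => true

# Pattern matching with ::utf8 decodes the codepoint back into an integer.
<<codepoint::utf8>> = "\u{1F0A1}"
codepoint # => 127137
```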

Congratulations! You're a 💪er 🤓 than ever!

Update 2023-06-21

I saw this cool post and adapted the code a bit to provide a function which analyzes a string:

- It breaks a string into its graphemes (visible characters)
- It breaks those graphemes into Unicode code points
- It shows each code point as an integer: 0..127 for ASCII characters (see "the decimal set" in man ascii) and up to 1,114,111 (0x10FFFF) for others
- It shows the UTF-8 bytes, as bits, which encode that code point in the string

For instance, Bits.analyze_string("aë™🍠👍🏻") outputs this:

```elixir
[
  {"a", [{"a", 97, "01100001"}]},
  {"ë", [{"ë", 235, "11000011 10101011"}]},
  {"™", [{"™", 8482, "11100010 10000100 10100010"}]},
  {"🍠", [{"🍠", 127840, "11110000 10011111 10001101 10100000"}]},
  {"👍🏻",
   [
     {"👍", 128077, "11110000 10011111 10010001 10001101"},
     {"🏻", 127995, "11110000 10011111 10001111 10111011"}
   ]}
]
```

Here's the code:

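```elixir
defmodule Bits do
  # Splits a string into graphemes, then each grapheme into codepoints,
  # pairing every codepoint with its integer value and its UTF-8 bits.
  def analyze_string(string) do
    string
    |> String.graphemes()
    |> Enum.map(fn grapheme ->
      {grapheme,
       Enum.map(String.codepoints(grapheme), fn codepoint ->
         {codepoint, unicode_number(codepoint), utf_8_bits(codepoint)}
       end)}
    end)
  end

  # A single-codepoint string becomes a one-element charlist: [integer].
  def unicode_number(codepoint) do
    [val] = String.to_charlist(codepoint)
    val
  end

  # Walks the binary one bit at a time, then groups the bits into bytes.
  def utf_8_bits(binary) do
    for(<<x::1 <- binary>>, do: "#{x}")
    |> Enum.chunk_every(8)
    |> Enum.join(" ")
  end
end
```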